Don't overestimate the importance of performance when choosing a stack for your Web project

Martin Pierret · 9 min read

Understand how a suitable backend language can make a server handle higher loads and help reduce operating costs – or not.

A few months ago, a colleague of mine gave a talk detailing how great his recent experience with Go had been. As a side note, he mentioned that Web applications written in Go were able to handle far greater loads than those powered by its mainstream Web competitors. This intrigued me: if this performance gap does exist, what is it due to, and is it significant enough for me, a Web developer, to actually care about it?

After a bit of research, I was confident I had a better understanding of the factors at play in the performance of Web servers. Still, I was lacking some quantitative insight that would allow me, at last, to decide whether taking these differences into account would help me bring more value to my next project. This is when I chose to run a small home-made benchmark comparing Node, PHP, and Go – three technologies we use at Theodo – that I will use to draw the final conclusions of this post.

What is performance anyway?

The first step of my investigation was to make sure I knew what I meant by performance. Although there is no single definition of this concept, I am confident that the following is relevant to my needs as a Web developer:

Performance is the ability of a server to handle a great number of requests during a given period of time.

Thus, a server that performs well according to this definition will be able to run on less powerful hardware, reducing operating costs and environmental footprint.

It is also correlated with other definitions of performance you may have thought about. For example, the faster a server can respond to a request, the more requests per minute it should be able to handle.

What a performant server is made of

The next thing to realize when trying to understand how a particular technology may influence the performance of a server is that during the whole process of serving an HTTP request, time dedicated by the CPU to executing code actually written by the Web developer or by the Web framework developers is often extremely small compared to:

- Time spent performing heavy computations, such as hashing a password, manipulating images, etc.

- Time spent waiting for network calls to complete. Our server is most likely communicating with distant APIs and databases, or with the local file system. This takes far more time than executing a few thousand instructions on a CPU.

As counterintuitive as it may seem at first, heavy computations will not make much of a difference here. “Slower” interpreted languages will typically call well-optimized binaries under the hood to handle those kinds of tasks.

    [Summary]:
      ticks   total   nonlib   name
          0    0.0%     0.0%   JavaScript
         72   63.7%   100.0%   C++
          1    0.9%     1.4%   GC
         41   36.3%            Shared libraries

What will make a difference, on the other hand, is the way the server uses the waiting times during the processing of a request to start handling other requests concurrently.

Scheduling how the CPU will handle numerous concurrent connections to the server is a complicated problem that can be addressed in a number of ways:

- Typical PHP servers will let OS threads handle the task. To put it simply, threads are the mechanism that operating systems use to give us the feeling that they are processing several tasks in parallel. Thus, threads are also natural candidates for managing the concurrent processing of HTTP requests (each thread being responsible for a single connection), even though this is not what they were primarily designed for. This unfortunately comes with some performance issues: each new thread consumes quite a lot of memory, and the time spent switching from the execution of one thread to another is also non-negligible.

- Technologies such as Node or Go will keep the processing of all the requests in a single thread or a few threads, but will use their own user-land mechanisms, such as an event loop (Node) or goroutines (Go), to address the scheduling issue. Although these two mechanisms will make Web developers write very different-looking code, they have in common that they do not suffer from the same overhead as OS threads do when opening a new connection or when switching from one connection to another.
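To make the idea of overlapping waiting times concrete, here is a minimal Go sketch. It is not taken from the benchmark: each simulated request only waits (a `time.Sleep` standing in for a network call), and the function names and durations are assumptions chosen for illustration. It compares handling the requests one after the other with handling each one in its own goroutine.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// handleRequest simulates a request that spends all its time waiting,
// for example on a remote API. The sleep is a stand-in, not real I/O.
func handleRequest(waitMs int) {
	time.Sleep(time.Duration(waitMs) * time.Millisecond)
}

// serveSequentially handles n requests one after the other and
// returns the elapsed time in milliseconds.
func serveSequentially(n, waitMs int) int64 {
	start := time.Now()
	for i := 0; i < n; i++ {
		handleRequest(waitMs)
	}
	return time.Since(start).Milliseconds()
}

// serveConcurrently starts one goroutine per request so that the
// waiting times overlap, and returns the elapsed time in milliseconds.
func serveConcurrently(n, waitMs int) int64 {
	start := time.Now()
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			handleRequest(waitMs)
		}()
	}
	wg.Wait()
	return time.Since(start).Milliseconds()
}

func main() {
	fmt.Println("sequential ms:", serveSequentially(20, 10))
	fmt.Println("concurrent ms:", serveConcurrently(20, 10))
}
```

The sequential run takes at least the sum of all waits, while the concurrent run should take roughly the duration of a single wait. The exact figures depend on the machine.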

Benchmark

We now know that thread-based Web servers should in theory be outperformed by their counterparts that use a more suitable concurrency model. But is the performance gap wide enough to be of any importance to developers? Let’s find out!

To carry out my little experiment, I set up a server and wrote three different routes in PHP, Node, and Go (more details about versions and my exact protocol in the appendix):

- When route 1 is fetched, the server hashes a string then returns OK.

- When route 2 is fetched, the server performs a call to a (very) distant server, waits for the response, then returns OK.

- Route 3 is a mix between route 1 and route 2: when it is fetched, there is a 1% chance that the server hashes a string, else it performs a call to the distant server. It emulates a server that performs a lot of I/O (database queries for instance), and some CPU-intensive tasks (such as hashing passwords to identify users).
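As a rough illustration of the three routes described above, here is a Go sketch. It is not the benchmark's code: the route paths, the `newMux` and `shouldHash` helpers, and the `remoteURL` parameter are hypothetical, and repeated SHA-256 replaces the hashing step only to stay within the standard library. A local stub plays the distant server so that the example runs offline.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"math/rand"
	"net/http"
	"net/http/httptest"
)

// hashString stands in for the CPU-heavy work of route 1. Repeated
// SHA-256 is an assumption that keeps the sketch dependency-free.
func hashString(s string, rounds int) string {
	sum := sha256.Sum256([]byte(s))
	for i := 1; i < rounds; i++ {
		sum = sha256.Sum256(sum[:])
	}
	return hex.EncodeToString(sum[:])
}

// callRemote stands in for the I/O-bound work of route 2: it waits for
// a response from another server and closes the body without reading it.
func callRemote(url string) error {
	resp, err := http.Get(url)
	if err != nil {
		return err
	}
	return resp.Body.Close()
}

// shouldHash decides what route 3 does: r is a random number in [0, 1),
// and the route hashes when r falls in the lowest 1%.
func shouldHash(r float64) bool {
	return r < 0.01
}

// newMux wires the three routes. remoteURL is a hypothetical parameter
// pointing at the distant server.
func newMux(remoteURL string) *http.ServeMux {
	mux := http.NewServeMux()
	hash := func(w http.ResponseWriter) {
		hashString("some string", 100000)
		fmt.Fprint(w, "OK")
	}
	remote := func(w http.ResponseWriter) {
		if err := callRemote(remoteURL); err != nil {
			http.Error(w, err.Error(), http.StatusBadGateway)
			return
		}
		fmt.Fprint(w, "OK")
	}
	mux.HandleFunc("/route1", func(w http.ResponseWriter, _ *http.Request) { hash(w) })
	mux.HandleFunc("/route2", func(w http.ResponseWriter, _ *http.Request) { remote(w) })
	mux.HandleFunc("/route3", func(w http.ResponseWriter, _ *http.Request) {
		if shouldHash(rand.Float64()) {
			hash(w)
		} else {
			remote(w)
		}
	})
	return mux
}

func main() {
	// A local stub plays the distant server so the sketch runs offline.
	stub := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, _ *http.Request) {
		fmt.Fprint(w, "pong")
	}))
	defer stub.Close()

	mux := newMux(stub.URL)
	for _, path := range []string{"/route1", "/route2", "/route3"} {
		rec := httptest.NewRecorder()
		mux.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
		fmt.Println(path, rec.Code, rec.Body.String())
	}
}
```

Keeping the 1% decision in its own function makes the mix ratio of route 3 easy to change and to check in isolation.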

To compare performance, we’ll put some load on every route, one by one, for every language, and use the following comparison criterion:

Reference load = the maximum number of HTTP requests a server can handle in a minute, such that 95% of requests take less than 3 seconds.
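The criterion above can be checked on a list of measured response times with a percentile computation. The following Go sketch assumes latencies are collected in seconds and uses the nearest-rank method. The function names and the sample values are hypothetical, and the load-testing tool used for the benchmark may compute percentiles differently.

```go
package main

import (
	"fmt"
	"sort"
)

// percentile returns the nearest-rank p-th percentile (1 <= p <= 100)
// of the given latencies, in seconds. It returns 0 for an empty slice.
func percentile(latencies []float64, p int) float64 {
	if len(latencies) == 0 {
		return 0
	}
	// Sort a copy so the caller's slice is left untouched.
	sorted := append([]float64(nil), latencies...)
	sort.Float64s(sorted)
	// Integer ceiling of p/100 * n, to avoid floating-point surprises.
	rank := (p*len(sorted) + 99) / 100
	if rank < 1 {
		rank = 1
	}
	return sorted[rank-1]
}

// meetsCriterion reports whether 95% of requests took less than 3 seconds.
func meetsCriterion(latencies []float64) bool {
	return percentile(latencies, 95) < 3.0
}

func main() {
	// Hypothetical latencies, in seconds, for 20 requests.
	latencies := []float64{0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2, 1.3,
		1.4, 1.5, 1.6, 1.7, 1.8, 1.9, 2.0, 2.1, 2.2, 4.5}
	fmt.Println("p95:", percentile(latencies, 95))
	fmt.Println("meets criterion:", meetsCriterion(latencies))
}
```

Finding the reference load then amounts to increasing the number of requests per minute until `meetsCriterion` stops holding.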

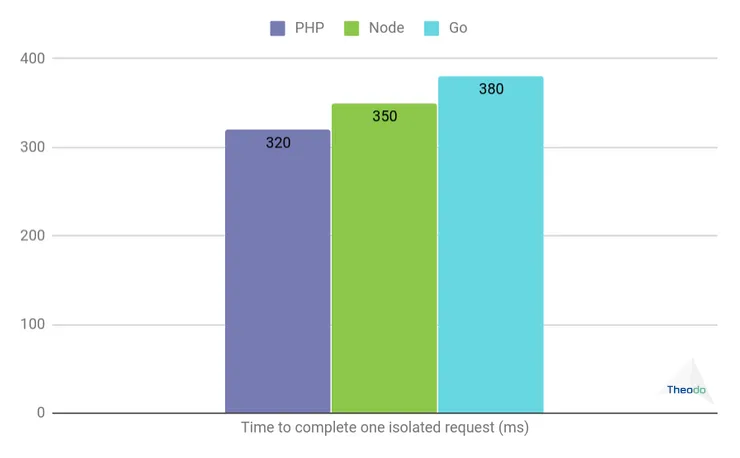

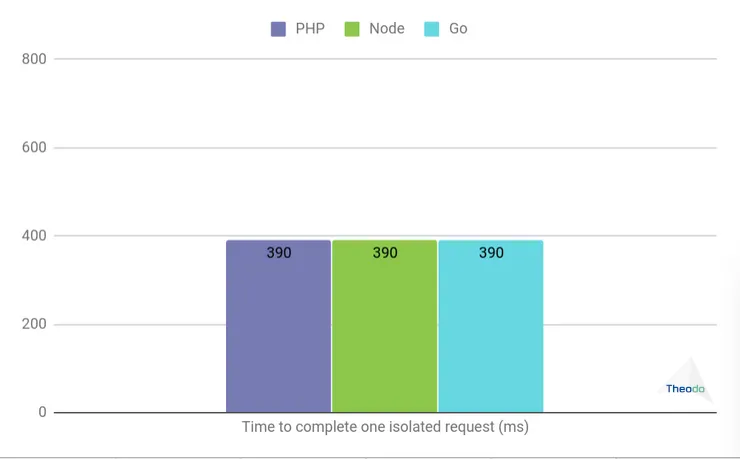

Route 1 falls into the heavy computations category, so we expect to see little difference between all three technologies:

The small variations we observe can probably be explained by slight differences in bcrypt implementations. An interesting fact to notice here is that the reference load could have been predicted by dividing 60 seconds (1 minute) by the time it takes to complete a single isolated request (PHP case: 60 / 0.320 ≈ 188). This means that our server is simply processing the requests one after the other, and has no opportunity to parallelize anything since there is no waiting time while handling a request.
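The prediction above is plain arithmetic, sketched here in Go. The function name is hypothetical, and the only input taken from the article is the 0.320 s isolated request time of the PHP case, expressed in milliseconds to keep the computation in integers.

```go
package main

import "fmt"

// predictedReferenceLoad estimates how many requests per minute a server
// can handle when it processes them strictly one after the other.
// requestMs is the duration of a single isolated request in milliseconds.
func predictedReferenceLoad(requestMs int) int {
	if requestMs <= 0 {
		return 0
	}
	const minuteMs = 60_000
	// Integer division rounded to the nearest whole request.
	return (minuteMs + requestMs/2) / requestMs
}

func main() {
	// 320 ms is the isolated PHP request time quoted above.
	fmt.Println(predictedReferenceLoad(320)) // prints 188
}
```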

So far, our results match the theory. Great!

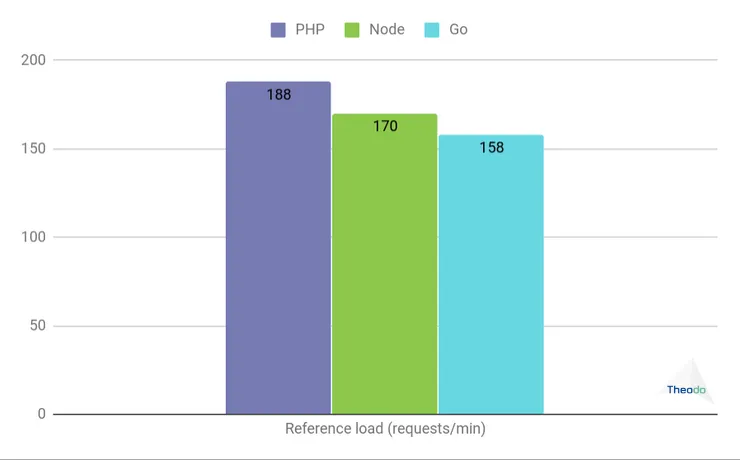

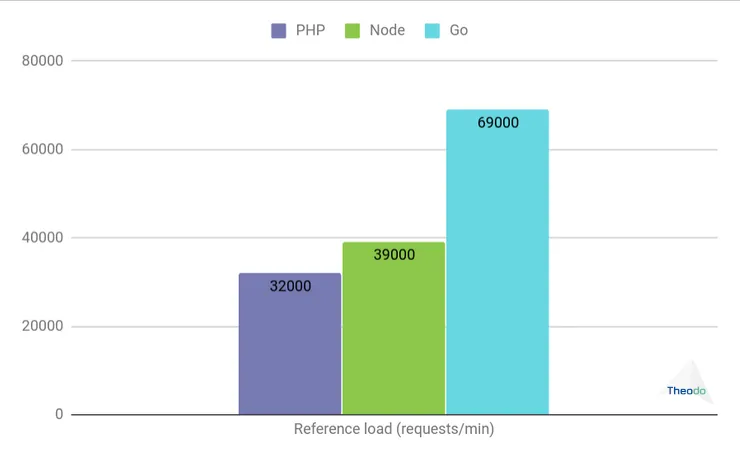

Route 2 is much more interesting since there is now plenty of time for the server to parallelize requests while it is waiting for an API call to return. Let’s see what happens in this case:

We notice that the time it takes to handle a single isolated request is exactly the same no matter the language, which seems natural since the bottleneck of our route is a very long network call. However, huge differences arise when we start putting some load on the server:

-

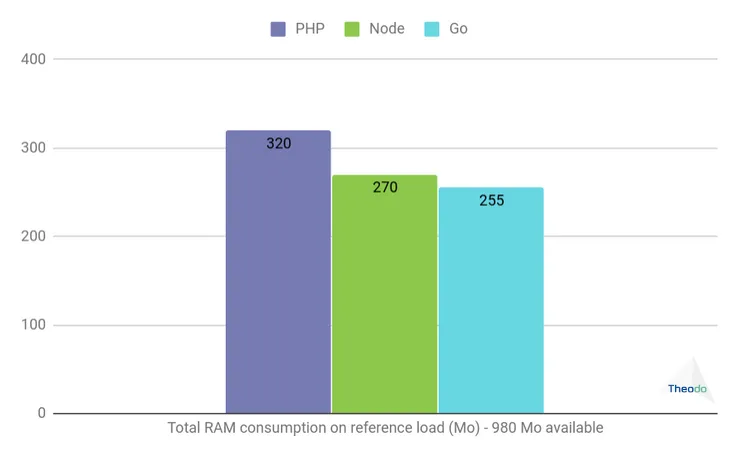

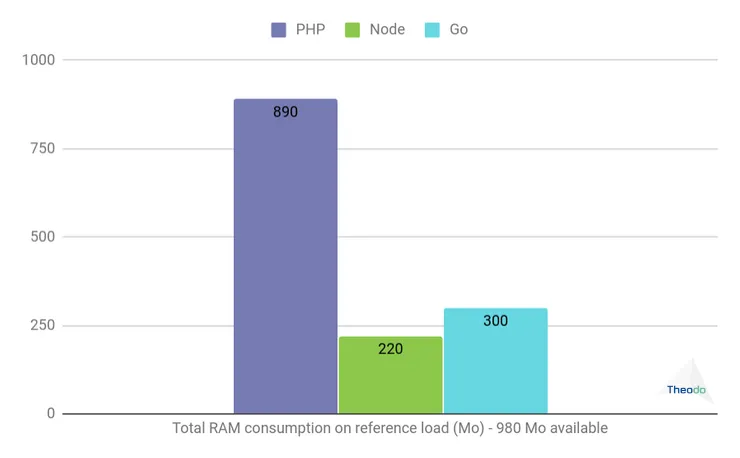

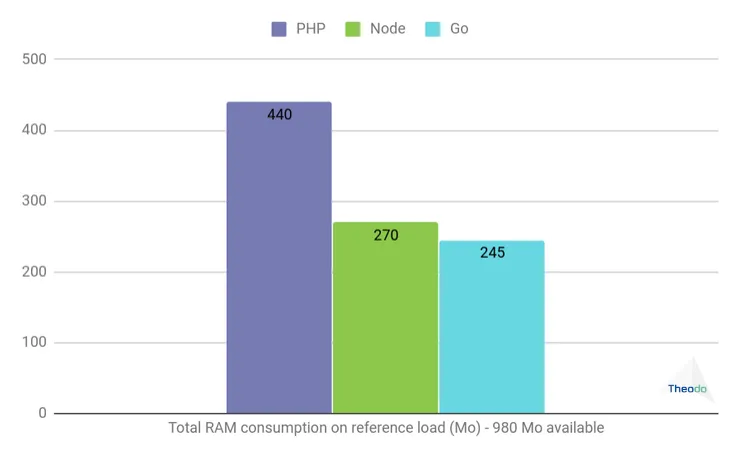

Our Node server can handle about 20% more load than its PHP counterpart, which is significant, but definitely not as spectacular as the 1-to-4 ratio in RAM consumption. This seems to validate that thread-based servers are more memory-intensive than servers that use a more specialized concurrency model!

-

Meanwhile, our Go server is able to keep a low memory footprint while handling almost twice as many requests per minute than the former two. I’ll admit that I have yet to find a satisfactory explanation for this result, and if you do have one, I’d be very grateful if you could take the time to share it with me!

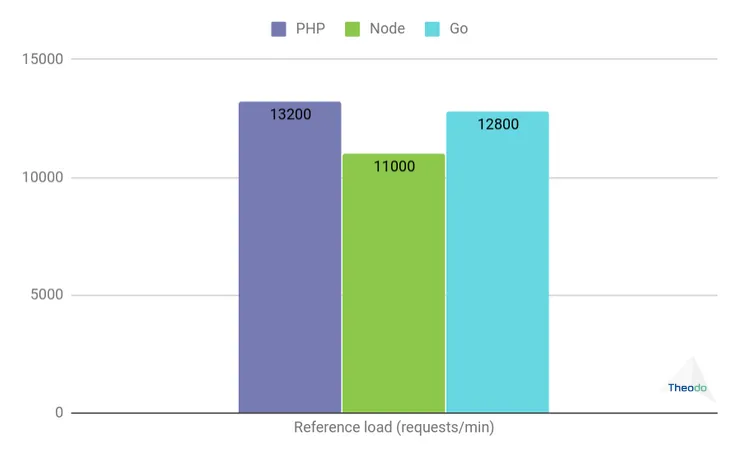

However, a real-world server could very well need to perform a few CPU-intensive operations among all these network calls, which is what I tried to emulate with route 3:

The most noticeable thing in this case is PHP’s memory consumption, yet it is still far from reaching any critical level. Reference loads, on the other hand, once again seem fairly similar.

Let’s talk about money

All that is very interesting, but what matters in the end is how much money you will have to spend to run your application.

As an example, let’s consider a large Symfony (PHP) project we are currently developing at Theodo. It handles about 50 million requests a day and runs on 32 machines with 8 cores and 32 GB of memory each. A similar infrastructure on AWS costs roughly $100,000 a year.

Our benchmark says that a more performant language could potentially divide infrastructure requirements by two. This would mean savings of $50,000 per year.
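The figures above can be put into perspective with a few lines of Go. This is a back-of-the-envelope sketch: the function names are hypothetical, the load is assumed to be spread evenly over the day and over the machines, and cost is assumed to scale linearly with the number of machines.

```go
package main

import "fmt"

// requestsPerSecond converts a daily request count into an average rate,
// assuming the load is spread evenly over 24 hours.
func requestsPerSecond(requestsPerDay float64) float64 {
	return requestsPerDay / (24 * 60 * 60)
}

// yearlySavings returns the money saved when infrastructure needs are
// divided by the given factor, assuming cost scales linearly with the
// number of machines (an assumption, not a measured fact).
func yearlySavings(yearlyCost, factor float64) float64 {
	return yearlyCost - yearlyCost/factor
}

func main() {
	total := requestsPerSecond(50_000_000)
	fmt.Printf("average load: %.0f req/s, or %.1f req/s per machine\n", total, total/32)
	fmt.Printf("savings: $%.0f per year\n", yearlySavings(100_000, 2))
}
```

Real traffic has peaks, so the infrastructure is sized for more than the average rate computed here.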

Conclusion

These results tell me that choosing a suitable technology could have a measurable impact on operating costs if my service is mainly performing I/O, with close to zero CPU-intensive tasks. Otherwise, my application’s bottleneck will lie in the CPU-intensive code, and the technology I choose for writing my controllers will be of no importance whatsoever.

Let’s also keep in mind that there are many more practical considerations that should be taken into account first when choosing a production-grade technology, as they may have a more significant impact on a project’s success. For example, a rich and mature ecosystem should come with a greater development speed, and should make it easier to find experienced developers to hire.

Note that Web performance in general should definitely be a concern for whoever wants to build a successful product! For that, you can check out our performance experts at Theodo [FR].