How to solve a network problem locally with Docker

Samuel Briole

You can follow along with this article using the related GitHub repository.

Introduction

During a project at Theodo, I worked on a security issue: controlling access to an application.

Fixing it meant changing NGINX and Apache configurations.

When the issue was discovered, we tried to fix it directly on the production environment, and we failed.

If you make this kind of change directly in production, it probably won’t work on the first try and it may break the application. You need to test it first.

What is my problem?

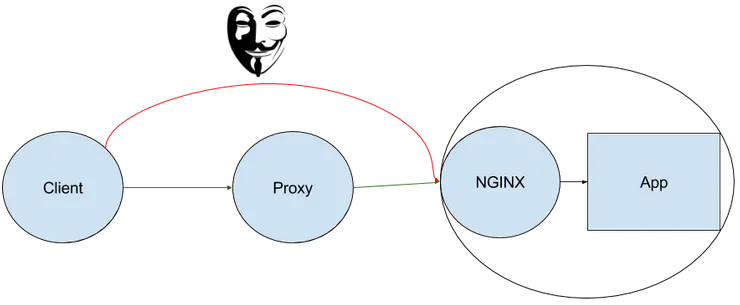

I want to restrict access to my NGINX server so that clients can only reach it through the proxy, never directly.

To solve this problem, I want to simulate the Proxy and the NGINX servers. I can either:

- Use several servers in a cluster

- Use virtual machines locally

- Use Docker locally

The cluster solution is the worst fit: it can’t run locally, and it costs money. The second solution is better, but virtual machines are not easy to configure as a local network, and they consume a lot of computing resources because each one runs a whole OS.

In contrast, Docker is a convenient tool to run several containers (which use few computing resources) and simulate a network of independent servers.

How to set up my containers using docker-compose?

This part requires docker-compose. It is a tool that creates several Docker containers with one command.

Create a docker-compose.yml file containing:

```yaml
# docker-compose.yml
version: '2'
services:
  app:
    image: nginx:latest
    container_name: app
  proxy:
    image: httpd:latest
    container_name: proxy
    depends_on:
      - app
```

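The depends_on block means that the app container is started before the proxy container. It only controls the start order; it does not wait for NGINX to be ready to accept requests.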

How to link the ports between my container and my computer?

Add some ports:

```yaml
# docker-compose.yml
services:
  app:
    ...
    ports:
      - "443:443"
    ...
  proxy:
    ...
    ports:
      - "9443:443"
    ...
```

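Each entry maps the ports like this: local-port:container-port. For example, https://localhost:9443/ on your computer reaches port 443 inside the proxy container.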

How to watch logs on local files and enable debug?

Link the log files in your volumes:

```yaml
# docker-compose.yml
services:
  app:
    ...
    volumes:
      - ./nginx/logs:/usr/share/nginx/logs
    ...
  proxy:
    ...
    volumes:
      - ./proxy/apache2/logs:/usr/local/apache2/logs
    ...
```

It links directories or single files like this:

local/path:container/path

You can now monitor the logs in your editor at proxy/apache2/logs and nginx/logs, or using tail -f.
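As a minimal sketch of the tail approach, the helper below prints the last lines of every file in the two mounted log directories. The function name recent_logs is hypothetical, and the actual log file names depend on your NGINX and Apache configuration, so the sketch globs the directories instead of naming files.

```shell
# Print the last 20 lines of every log file in the given directories.
# recent_logs is a hypothetical helper name, not part of Docker or the repo.
recent_logs() {
  for dir in "$@"; do
    for file in "$dir"/*; do
      # Skip unmatched globs and sub-directories
      if [ -f "$file" ]; then
        printf '==> %s <==\n' "$file"
        tail -n 20 "$file"
      fi
    done
  done
}

# Directories mounted in docker-compose.yml above
recent_logs proxy/apache2/logs nginx/logs

# To follow new entries live instead (stop with Ctrl + C):
# tail -f proxy/apache2/logs/* nginx/logs/*
```

If the directories are still empty (for example before the first docker-compose up), the helper prints nothing.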

Set NGINX in debug mode using a custom command:

```yaml
# docker-compose.yml
services:
  app:
    ...
    command: [nginx-debug, '-g', 'daemon off;']
    ...
```

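This launches the nginx-debug binary instead of the regular nginx binary when the container starts. Debug messages only appear if the error_log directive in your NGINX configuration is set to the debug level.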

How to link the configuration files between my container and my computer?

Add some volumes:

```yaml
# docker-compose.yml
services:
  app:
    ...
    volumes:
      - ./nginx/nginx:/etc/nginx:ro
      - ./nginx/index.html:/usr/share/nginx/coucou/index.html:ro
    ...
  proxy:
    ...
    volumes:
      - ./proxy/conf/httpd.conf:/usr/local/apache2/conf/httpd.conf:ro
      - ./proxy/apache2/conf/sites-available:/usr/local/apache2/conf/sites-available:ro
    ...
```

The :ro at the end means read only for the container. In other words, you can only edit this file/directory outside the container.
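The fragments above each show one key at a time, so here is a sketch of how they might combine into a single file. Note that each service can have only one volumes key, so the log and configuration mounts are merged into one list. The file name docker-compose.full.yml is an assumption chosen to avoid overwriting an existing file, and the result may differ from the file in the repository.

```shell
# Write the merged compose file; the quoted 'EOF' keeps the content literal.
cat > docker-compose.full.yml <<'EOF'
# docker-compose.full.yml (hypothetical name): all fragments merged
version: '2'
services:
  app:
    image: nginx:latest
    container_name: app
    command: [nginx-debug, '-g', 'daemon off;']
    ports:
      - "443:443"
    volumes:
      - ./nginx/logs:/usr/share/nginx/logs
      - ./nginx/nginx:/etc/nginx:ro
      - ./nginx/index.html:/usr/share/nginx/coucou/index.html:ro
  proxy:
    image: httpd:latest
    container_name: proxy
    depends_on:
      - app
    ports:
      - "9443:443"
    volumes:
      - ./proxy/apache2/logs:/usr/local/apache2/logs
      - ./proxy/conf/httpd.conf:/usr/local/apache2/conf/httpd.conf:ro
      - ./proxy/apache2/conf/sites-available:/usr/local/apache2/conf/sites-available:ro
EOF

# Optional syntax check, if docker-compose is installed:
# docker-compose -f docker-compose.full.yml config
```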

How to solve my security problem?

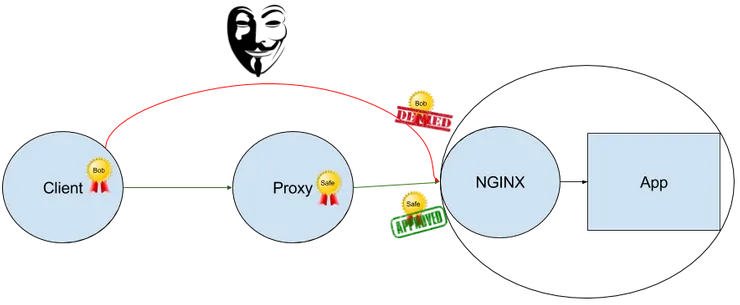

The solution to my problem is to enable SSL client authentication on the NGINX server, so that it only accepts requests coming from the proxy server.

Using the “fail & retry” process, I found the correct configuration:

- Modify the config files in proxy/conf/ and nginx/nginx/.

- Launch the containers with docker-compose up.

- Test if it works. If needed, look at the log files.

- Stop the containers with Ctrl + C and start over.
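One iteration of this loop could be scripted roughly as follows. This is a sketch of a variation, not the workflow above: it starts the containers in the background with up -d and stops them with stop, instead of running in the foreground and pressing Ctrl + C. The names retry_once, COMPOSE and WAIT are hypothetical, and the two-second wait is an arbitrary guess at startup time.

```shell
# COMPOSE is overridable, e.g. COMPOSE=echo for a dry run without Docker.
COMPOSE="${COMPOSE:-docker-compose}"

# Run one "fail & retry" iteration. Arguments: the check command to run
# once the containers are up (for example a curl call).
retry_once() {
  $COMPOSE up -d || return 1     # start app and proxy in the background
  sleep "${WAIT:-2}"             # crude wait for the servers to start
  check_status=0
  "$@" || check_status=$?        # run the check and remember its result
  $COMPOSE logs --tail=20        # show recent container output
  $COMPOSE stop                  # stop before the next config edit
  return "$check_status"
}

# Example (not run here): succeed only if the proxy answers without an HTTP error
# retry_once curl -k -s -f -o /dev/null https://localhost:9443/
```

The function returns the exit status of the check, so it can be chained in a script or simply re-run by hand after each configuration change.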

The important part of the configuration I discovered is the following:

```apache
# proxy/apache2/conf/sites-available/appli.conf
...
SSLProxyEngine on
SSLProxyCheckPeerName off
# sends the client certificate (Apache does not allow a comment on the same line as a directive)
SSLProxyMachineCertificateFile "/usr/local/apache2/ssl/proxy.pem"
...
```

```nginx
# nginx/nginx/conf.d/default.conf
server {
    ...
    # verifies the client certificate
    ssl_verify_client on;
    ssl_client_certificate /var/www/ca.crt; # Trusted CAs

    # verifies the client certificate subject, including the CN.
    # use $ssl_client_s_dn for nginx < 1.11.6:
    if ($ssl_client_s_dn_legacy != "/C=FR/ST=France/L=Paris/O=Theodo/OU=Blog/CN=proxy/emailAddress=samuelb@theodo.fr") {
        return 403;
    }
    ...
}
```

You can reproduce it by cloning my repo: Client-SSL-Authentication-With-Docker and running docker-compose up.

If you request the NGINX server directly (https://localhost/), you get a 400 error, but if you go through the proxy server (https://localhost:9443/), you can access the sensitive data.
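To compare the two paths from a terminal, a small curl wrapper can print just the HTTP status of each URL. This is a sketch: status_of is a hypothetical helper name, and -k is used on the assumption that the local certificates are not signed by a CA your machine trusts.

```shell
# Print only the HTTP status code for a URL.
status_of() {
  # -k: skip server certificate verification (local test certificates assumed)
  # -s -o /dev/null: suppress progress and body; -w prints the status code
  # --max-time 5: give up after five seconds
  curl -k -s -o /dev/null --max-time 5 -w '%{http_code}' "$1"
}

echo "direct NGINX: $(status_of https://localhost/)"
echo "via proxy:    $(status_of https://localhost:9443/)"
```

With the containers running, the direct request should report the 400 described above. If nothing is listening on a port, curl prints 000 instead of a status code.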

Conclusion

By fixing this security vulnerability with Docker, I was able to:

- give my client more visibility into how I was handling this network problem, because I had a plan to break the problem down.

- reassure my client, because there was no danger for the production environment.

- increase my client’s satisfaction, because I solved the problem quickly.