API Load Testing: Enhance Your Skills with Locust

Paul Royer · 4 min read

Load testing is serious work, and doing it poorly can have disastrous consequences for big companies’ production servers. But load testing is not only for experts at massive companies! You may simply want to check quickly that your server performs well enough, using only your own computer.

Locust is a perfect tool for such occasions:

- A great user interface, allowing you to launch tests and monitor results in real time, directly from your web browser.

- Scripts written in Python, perhaps the most popular language, according to IEEE Spectrum’s Top Programming Languages study, for example.

- Installable in seconds, free and open source.

Start load testing with Locust!

First, you need an API to load test. Let’s say you have an amusement park application running on http://localhost:8080 that exposes the route GET /bookings with two query parameters: age and date.
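If you don’t have such an API at hand, here is a hypothetical stand-in built only on Python’s standard library (`http.server`). The handler name `BookingsHandler`, the `run` function and the JSON response shape are assumptions made for illustration: it simply validates and echoes back the `age` and `date` parameters.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs, urlparse


class BookingsHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the amusement park API: GET /bookings?age=...&date=..."""

    def do_GET(self):
        url = urlparse(self.path)
        if url.path != "/bookings":
            self.send_error(404, "Not Found")
            return
        query = parse_qs(url.query)
        if "age" not in query or "date" not in query:
            self.send_error(400, "age and date are required")
            return
        # Echo the parameters back; a real API would look up bookings here.
        body = json.dumps(
            {"age": query["age"][0], "date": query["date"][0], "bookings": []}
        ).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet while Locust is swarming


def run(port: int = 8080) -> None:
    """Serve the stand-in API on localhost until interrupted (Ctrl+C)."""
    server = ThreadingHTTPServer(("localhost", port), BookingsHandler)
    try:
        server.serve_forever()
    finally:
        server.server_close()
```

Calling `run()` serves it on http://localhost:8080. `ThreadingHTTPServer` handles each request in its own thread, so one slow request does not block the others during a test.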

Getting started with Locust takes only a few seconds. With pip already installed, just run:

```shell
pip3 install locust
```

Then, you need to write a locustfile describing how users will interact with your API. Let’s create a basic one named locustfile.py:

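```python
from locust import HttpUser, between, task

class User(HttpUser):
    # each simulated user pauses 1 to 2 seconds between task executions
    wait_time = between(1, 2)

    @task
    def get_booking(self):
        age = 19
        date = "2024-06-08"
        # the URL is relative: Locust prepends the host set in the web UI
        self.client.get(f"/bookings?age={age}&date={date}")
```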

The User class describes the expected behaviour of a user. For each simulated user, Locust repeatedly picks one of the defined tasks, runs it, then waits. In this simple case there is only one task, and the requested URL is always the same because age and date are hardcoded. wait_time = between(1, 2) makes each user pause for a random 1 to 2 seconds between task executions.
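To make that loop concrete, here is a conceptual model in plain Python. It is not Locust’s implementation: `uniform_wait` and `simulate_user` are hypothetical names, and the `sleep` parameter lets you inject `time.sleep` for real pauses or a no-op for a dry run.

```python
import random
from typing import Callable, List


def uniform_wait(min_wait: float, max_wait: float) -> Callable[[], float]:
    """Return a function drawing a pause uniformly between min_wait and max_wait seconds."""
    return lambda: random.uniform(min_wait, max_wait)


def simulate_user(
    tasks: List[Callable[[], None]],
    wait_time: Callable[[], float],
    iterations: int,
    sleep: Callable[[float], None],
) -> List[float]:
    """Conceptual model of one simulated user: pick a task, run it, pause, repeat.

    Returns the pauses taken, so the loop can be inspected without real sleeping.
    """
    pauses = []
    for _ in range(iterations):
        task = random.choice(tasks)  # with a single task, it is picked every time
        task()
        pause = wait_time()
        pauses.append(pause)
        sleep(pause)  # pass time.sleep for real pauses, or a no-op for a dry run
    return pauses


# Dry run: five iterations of the single get_booking task, without actually sleeping
calls = []
pauses = simulate_user([lambda: calls.append("get_booking")], uniform_wait(1, 2), 5, sleep=lambda s: None)
print(calls)  # ['get_booking', 'get_booking', 'get_booking', 'get_booking', 'get_booking']
```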

Setup is done: let’s swarm our API with Locust! In your terminal, from the folder containing your locustfile, simply run:

```shell
locust
```

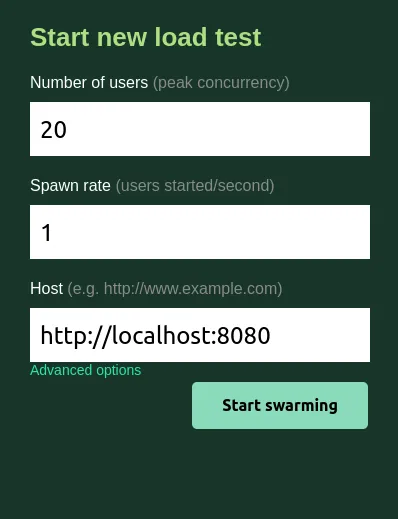

In your browser, head to http://0.0.0.0:8089/ and fill in the three parameters (number of users, spawn rate in users started per second, and host, here http://localhost:8080) before launching the test:

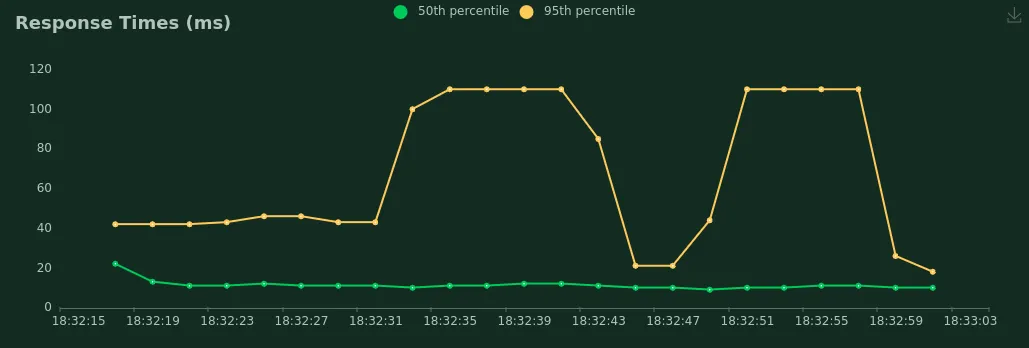
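Together with wait_time, these parameters determine the load you generate. As a rough back-of-the-envelope estimate (a simple closed-loop model, assuming one request per task and an average pause of 1.5 s for between(1, 2)), each user completes one request every response time + wait time seconds. The scenario numbers below (100 users, spawn rate 10, 50 ms responses) are hypothetical:

```python
def ramp_up_seconds(users: int, spawn_rate: float) -> float:
    """Seconds needed to start all users at `spawn_rate` new users per second."""
    return users / spawn_rate


def estimated_rps(users: int, avg_wait_s: float, avg_response_s: float) -> float:
    """Rough steady-state requests per second in a closed loop:
    each user sends one request per (response time + wait time) cycle."""
    return users / (avg_response_s + avg_wait_s)


# Hypothetical scenario: 100 users, spawn rate 10/s, 1.5 s average pause, 50 ms responses
print(ramp_up_seconds(100, 10))                  # 10.0
print(round(estimated_rps(100, 1.5, 0.05), 1))   # 64.5
```

If your API slows down under load, the estimated request rate drops too, which is why watching response times and requests per second together is useful.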

Press “Start swarming” and tadaa! ✨ We can now observe the response time of our API fluctuate in real-time:

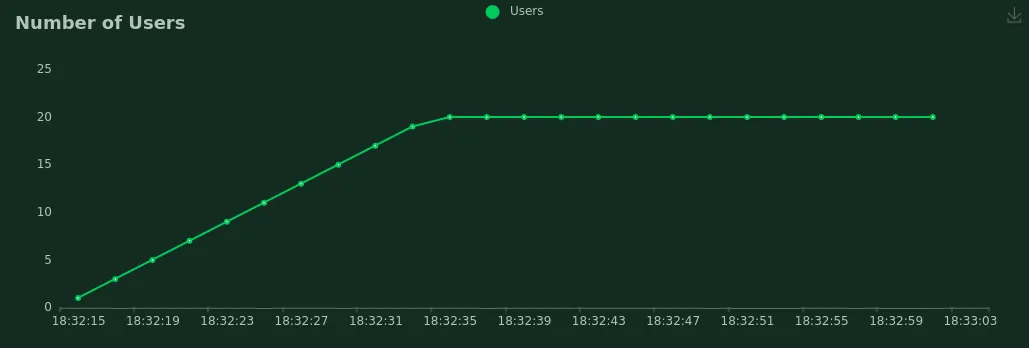

As the number of users grows:

Once the test is done, you can download an HTML report, which makes it easy to share the results visually with your team.

Benefiting from Locust plugins to deliver more value

Here, we load-tested our API in a basic way (one endpoint, always the same request parameters). We can do a lot more, and most of the time we must!

Let’s say your API is enjoying some success. To handle the increasing load, you add a cache to your endpoint, allowing fast responses to common requests. That’s nice, but if your load test keeps sending the same request, the cache will serve all of them. Your server will then appear able to handle far more requests than in a real situation, where requests vary and some of them miss the cache.
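The effect is easy to reproduce with a hypothetical cached handler built on Python’s `functools.lru_cache`, standing in for whatever cache your API really uses. The sketch compares the share of requests served from cache when the same request is repeated versus when every (age, date) pair differs:

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def get_bookings(age: int, date: str) -> str:
    """Hypothetical cached endpoint handler; the body stands in for a slow database query."""
    return f"bookings for age={age} on {date}"


def cache_hit_ratio(requests) -> float:
    """Send (age, date) requests through the cached handler and return the share served from cache."""
    get_bookings.cache_clear()
    for age, date in requests:
        get_bookings(age, date)
    info = get_bookings.cache_info()
    total = info.hits + info.misses
    return info.hits / total if total else 0.0


same = [(19, "2024-06-08")] * 100
varied = [(age, date) for age in range(100) for date in ("2024-06-08", "2024-06-09")]
print(cache_hit_ratio(same))    # 0.99
print(cache_hit_ratio(varied))  # 0.0
```

With 100 identical requests, only the first one does the real work; with 200 distinct pairs, every request misses the cache.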

To solve this issue, we should vary the parameters of the requests we send, that is, age and date. Here is one of many possibilities, using the CSV reader from the locust-plugins package (installable with pip3 install locust-plugins):

First, we need to create a CSV file listing all the test cases we want to use, for example in a file named booking-param.csv:

```csv
12,2024-06-08
20,2024-06-08
12,2024-06-09
20,2024-06-09
```

You can use a small script to build this CSV from the Cartesian product of all your parameters’ possible values.
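One possible version of that script, using only the standard library: `itertools.product` builds every combination and `csv.writer` writes one row per pair, with no header. The function names `write_booking_params` and `date_range` are hypothetical:

```python
import csv
import itertools
from datetime import date, timedelta


def date_range(start: str, days: int) -> list:
    """Return `days` consecutive ISO dates starting at `start` (YYYY-MM-DD)."""
    first = date.fromisoformat(start)
    return [(first + timedelta(days=i)).isoformat() for i in range(days)]


def write_booking_params(path: str, ages, dates) -> int:
    """Write every (age, date) combination as one CSV row; return the number of rows."""
    # product(dates, ages) varies the age fastest, so rows are grouped by date
    rows = [(age, day) for day, age in itertools.product(dates, ages)]
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(rows)
    return len(rows)
```

For example, `write_booking_params("booking-param.csv", [12, 20], date_range("2024-06-08", 2))` writes the same four combinations as above, in the same order.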

Then, we modify the locustfile to read our parameters from the CSV file:

```python
from locust import HttpUser, between, task
from locust_plugins.csvreader import CSVReader  # CSV reader from the locust-plugins package

booking_param_reader = CSVReader("booking-param.csv")  # read the CSV you created

class User(HttpUser):
    wait_time = between(1, 2)

    @task
    def get_booking(self):
        booking_params = next(booking_param_reader)  # next CSV row, as a list of strings
        age = booking_params[0]
        date = booking_params[1]
        # name= groups all these URLs under a single entry in Locust's statistics
        self.client.get(f"/bookings?age={age}&date={date}", name="/bookings")
```

Relaunch Locust, and each task execution will now use the next line of the CSV file you created, so consecutive requests differ. If you send more requests than there are lines in your CSV file (which will happen quickly), the reader starts over from the beginning of the file.
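The wrap-around behavior can be sketched with the standard `csv` module. This `CyclingCSVReader` is a hypothetical minimal stand-in, not the plugin’s source: it returns each row as a list of strings and rewinds the file once the last row has been read.

```python
import csv
from typing import Iterator, List


class CyclingCSVReader:
    """Yield CSV rows forever, rewinding to the first line after the last one."""

    def __init__(self, path: str):
        self._file = open(path, newline="")
        self._reader = csv.reader(self._file)

    def __iter__(self) -> Iterator[List[str]]:
        return self

    def __next__(self) -> List[str]:
        try:
            return next(self._reader)
        except StopIteration:
            self._file.seek(0)  # end of file reached: start over from the first line
            return next(self._reader)

    def close(self) -> None:
        self._file.close()
```

Values come back as strings (for example `["12", "2024-06-08"]`), which is fine here since they only end up in the query string.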

Note that we also pass a name argument to the get method: Locust then groups all these requests under a single line of its report instead of one line per distinct URL, which is very convenient for analysis!
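A simplified model of that grouping: without `name`, every distinct URL (query string included) gets its own entry; with `name`, they all collapse into one. Locust actually keys its statistics by request method and name, so treat the hypothetical `group_requests` below as an illustration rather than its internals:

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def group_requests(requests: Iterable[Tuple[str, Optional[str]]]) -> Counter:
    """Count requests per report entry: the explicit name when given, otherwise the full URL."""
    return Counter(name if name is not None else url for url, name in requests)


urls = [f"/bookings?age={age}&date=2024-06-08" for age in (12, 20, 12)]
print(len(group_requests((u, None) for u in urls)))   # 2 entries, one per distinct URL
print(group_requests((u, "/bookings") for u in urls))  # Counter({'/bookings': 3})
```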

Stay tuned: Locust is evolving

In an exhaustive 2020 comparison of load testing tools (written by a k6 engineer 😉), Locust was singled out for its low performance and for how clumsy it was to run locally on several CPU cores. Since then, Locust has gained the ability to run one process per CPU core with a single command:

```shell
locust --processes -1
```

Although Locust was created in 2011, it keeps gaining features, which makes it worth giving it a (re)try!