Nightly End-to-End & Performance Tests with Flashlight

Mo Khazali

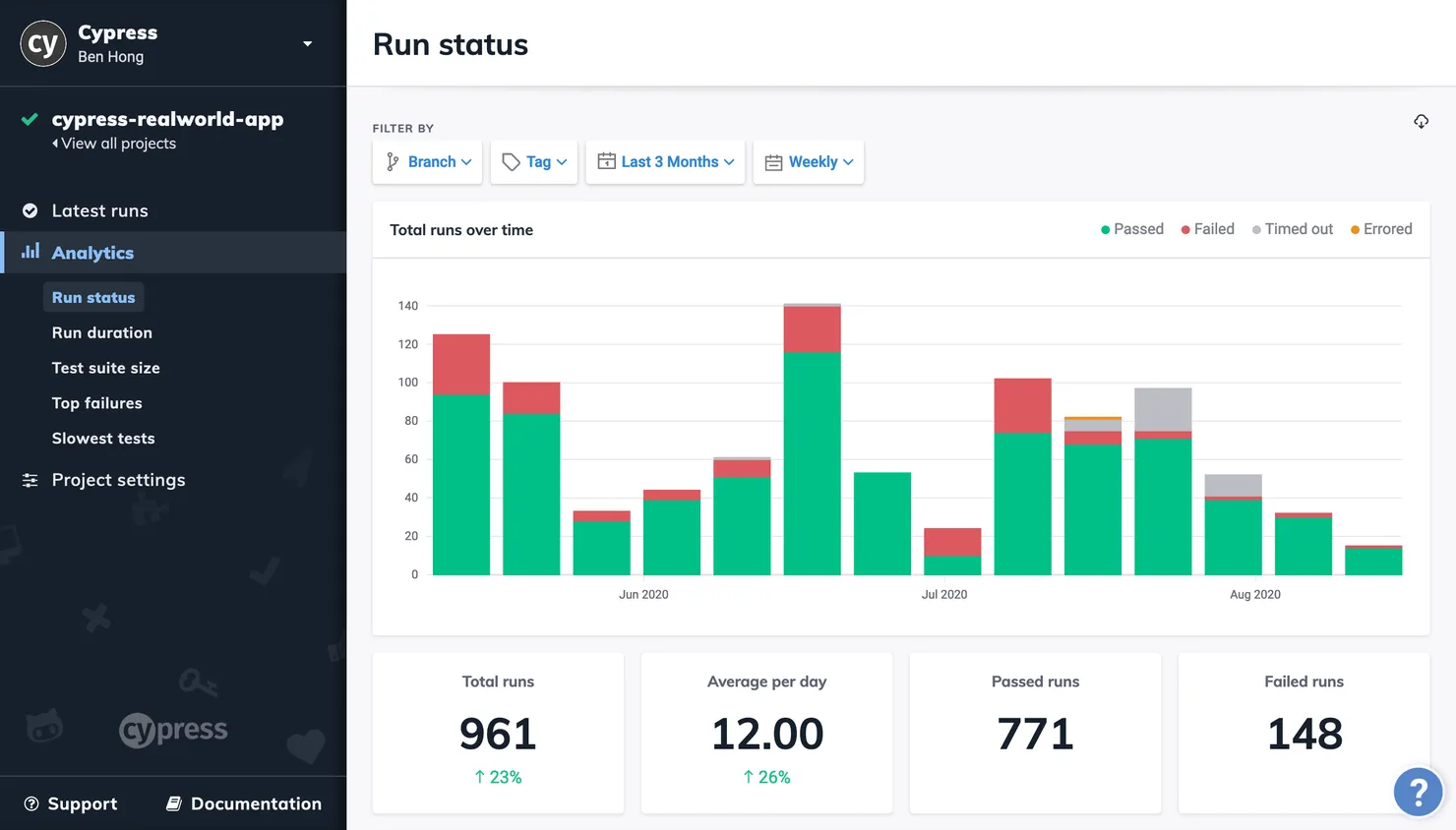

Several years ago, I was working on a web project with a number of critical flows that needed regular testing to make sure there were no functional or performance regressions across the app. On the web, this was pretty easy: we had Cypress tests running every night at 12 AM and generating reports for us to check in the morning.

This gave us a good view of the project's health at any point, and let us pinpoint when performance regressions were introduced.

In the mobile world, for the longest time, there was no comparable tool for easily running automated E2E and performance tests.

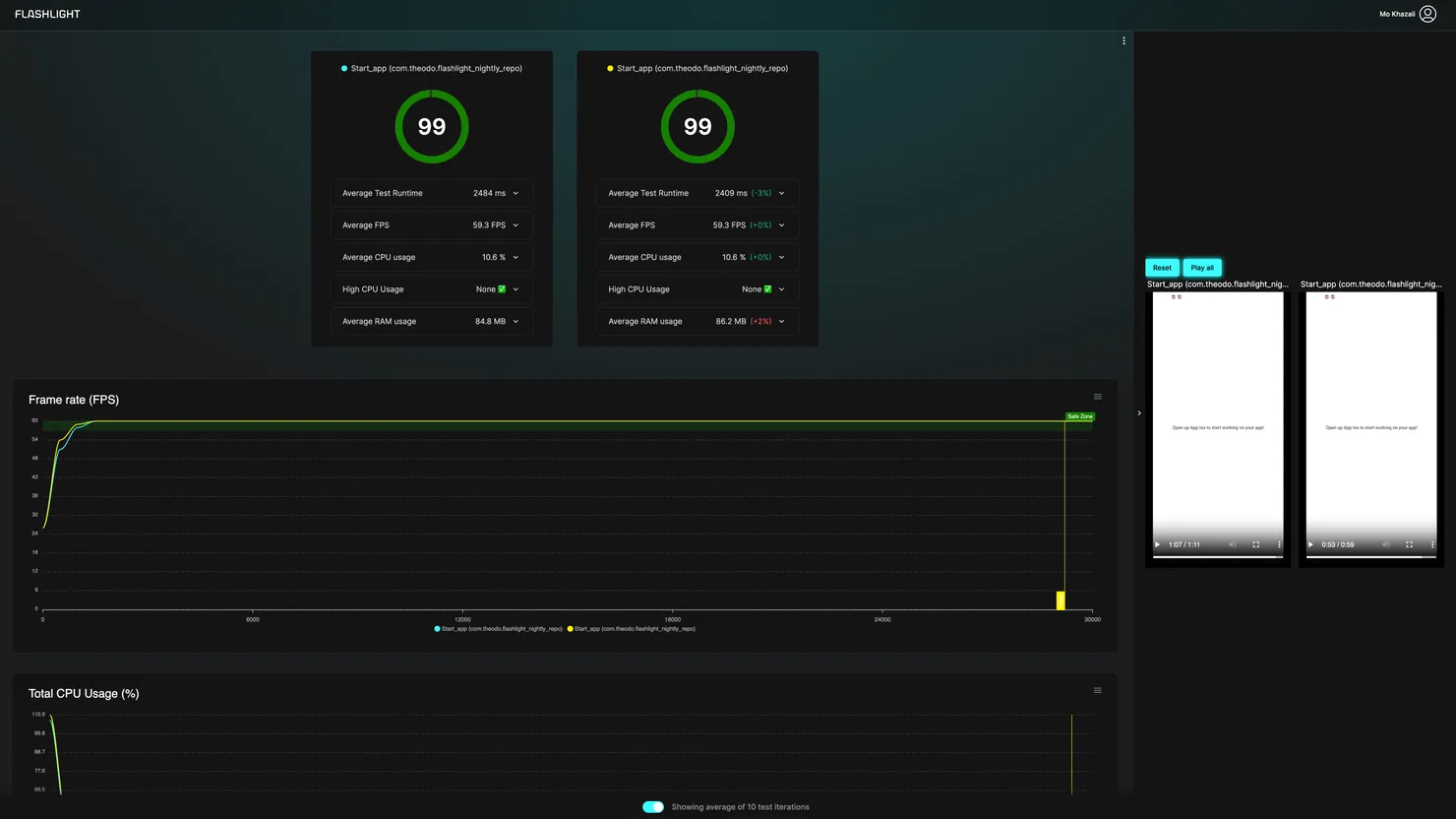

Recently, Alexandre Moureaux, a colleague of mine from our mobile team in France (BAM), has been working on Flashlight. It lets you test the performance of your mobile app by simply passing in an APK and some testing instructions (written with an E2E testing framework).

Thanks to Flashlight, running similar nightly tests on mobile is now very much possible, and we finally took that step on our recent project at Theodo. This article goes over our thinking, how we decided on the frequency of our tests, the limitations, and how you can set it up on your own project.

Our E2E Testing Flow

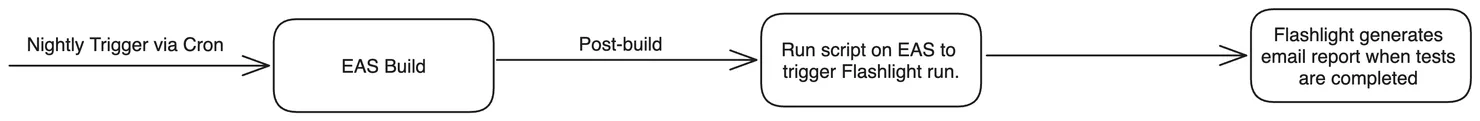

The workflow is actually quite simple, and it's split across GitHub Actions and Expo. A scheduled GitHub workflow triggers a build of our staging Android app on EAS every night at 12 AM. This is a release build set to internal distribution.

Once the build finishes on EAS, it runs a post-build hook, which uploads the build to Flashlight and triggers a basic E2E test.
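One detail worth knowing about the nightly trigger: GitHub Actions evaluates `schedule` cron expressions in UTC, so "12 AM" only lines up with local midnight if your team is on UTC. Below is a minimal sketch for turning a local time into a UTC cron expression, assuming a fixed UTC offset given in minutes. `local_time_to_utc_cron` is a hypothetical helper, and daylight-saving changes are deliberately ignored.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a local time (hour, minute) and a fixed UTC
# offset in minutes (e.g. 330 for UTC+5:30, -300 for UTC-5) into a daily
# GitHub Actions cron expression, which is always interpreted in UTC.
# Pass plain integers (8, not 08): shell arithmetic treats leading zeros as octal.
# Daylight-saving transitions are NOT handled.
local_time_to_utc_cron() {
  local hour=$1 minute=$2 offset_minutes=$3
  # Minutes since local midnight, shifted to UTC.
  local total=$(( hour * 60 + minute - offset_minutes ))
  # Wrap into 0..1439, since % can return negative values.
  total=$(( (total % 1440 + 1440) % 1440 ))
  # Cron field order: minute hour day-of-month month day-of-week
  printf '%d %d * * *\n' $(( total % 60 )) $(( total / 60 ))
}

local_time_to_utc_cron 0 0 330   # local midnight at UTC+5:30
local_time_to_utc_cron 0 0 0     # local midnight at UTC
```

With an offset of 330 (UTC+5:30), local midnight becomes `30 18 * * *`, which is 18:30 UTC on the previous day. If your offset shifts with daylight saving, you would need to update the cron line when the clocks change, or pick a time that is acceptable under both offsets.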

How often to test?

A big question we faced was how often to run our performance tests. Like all E2E tests, they can be costly and slow. On average, an Android build of our staging app takes around 20 minutes on EAS. Add the time it takes to run the Flashlight test (sometimes you'll have to wait in a queue) and you're looking at 25 to 30 minutes for each full test.

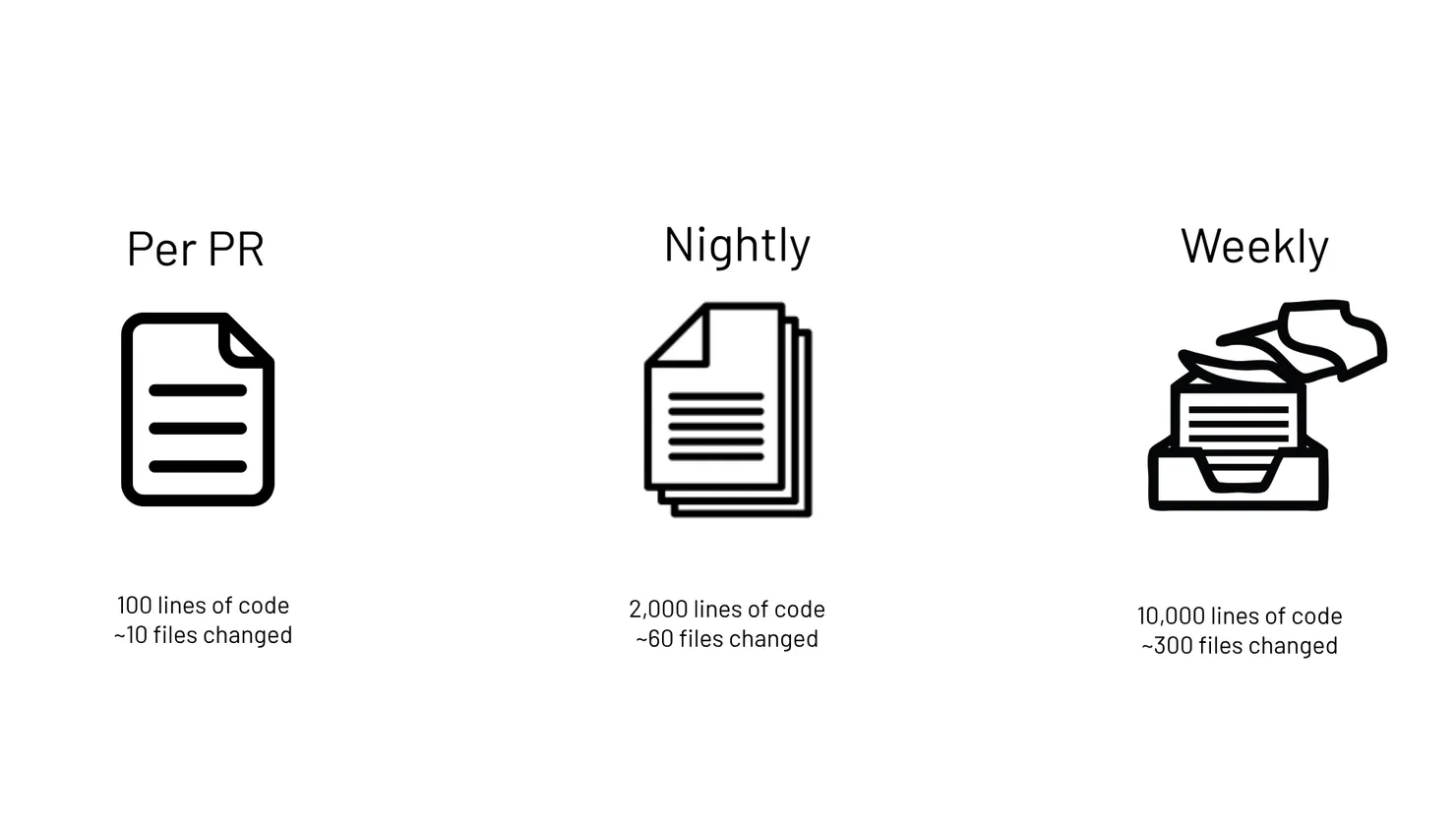

If we test too much, it slows down the team and becomes a bottleneck. We were very conscious of not blocking every PR on E2E tests, as that would add at least 25 to 30 minutes to our lead time and make the feedback loop much slower.

On the other hand, if we test too little, we will have a harder time pinpointing the cause when regressions do happen. On average, we change approximately 10,000 lines of code across roughly 300 files per week (not the best metric, but it gives you an idea of scale). Given the size of our team and the speed of development, we felt that weekly tests would make it much harder to debug a full week's worth of changes.

As always, it depends on your team and how quickly you're moving. In our case, we have a team of 15 developers, all working on the same project and aiming for a quick turnaround on an ambitious universal app. We're landing around 10 medium-to-large PRs introducing new features every single day. Ultimately, we found nightly runs to be the least intrusive approach that still gives us the visibility to spot a regression introduced the previous day.
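To make the trade-off concrete, here is a back-of-the-envelope comparison using the rough figures above (about 10 feature PRs a day and 25 to 30 minutes per full test). The sketch takes the lower bound of 25 minutes and assumes PRs only land on a 5-day working week; both are illustrative assumptions rather than measurements, and `cadence_summary` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope cadence model (illustrative numbers, not measurements).
PRS_PER_DAY=10       # ~10 feature PRs landed per working day
MINUTES_PER_RUN=25   # lower bound of the 25-30 minute build + Flashlight run
WORKING_DAYS=5       # assumption: PRs only land on weekdays

# Hypothetical helper: print a cadence's weekly CI cost and how many PRs a
# single run has to cover if it catches a regression.
cadence_summary() {
  local name=$1 runs_per_week=$2 prs_per_run=$3
  echo "$name runs/week=$runs_per_week ci_minutes/week=$(( runs_per_week * MINUTES_PER_RUN )) prs_per_run=$prs_per_run"
}

# Gate every PR: at least one run per PR, each on that PR's critical path.
cadence_summary per-pr $(( PRS_PER_DAY * WORKING_DAYS )) 1
# Nightly cron ("0 0 * * *") fires 7 times a week; each weekday run covers ~1 day of PRs.
cadence_summary nightly 7 "$PRS_PER_DAY"
# Weekly: a single run has to cover the whole week's PRs.
cadence_summary weekly 1 $(( PRS_PER_DAY * WORKING_DAYS ))
```

Under these assumptions, gating every PR costs around 1,250 CI minutes a week and sits on every PR's lead time, while a weekly run is cheap but leaves about 50 PRs to dig through. Nightly runs keep each investigation to roughly a day's worth of PRs without blocking anyone.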

Limitations

That said, this approach still has a number of limitations that need to be considered and factored in.

No iOS Testing (yet…)

As of November 2023, Flashlight doesn't support iOS testing, so the tests all run on low-end Android devices. This is fine for our use case, since most of our users will be in India (where Android has over 90% of the market share). On top of that, iOS devices typically have more powerful internals than most Android phones on the market. As a result, most of the performance issues we've dealt with have been on Android devices.

However, not testing iOS means there is still a surface area of possible regressions that isn't being covered. This should change in the very near future, as Flashlight's iOS support is coming soon.

Using OTA Updates instead of builds?

Currently, we build our apps every night. This adds overhead (and build costs of around $30 per month, which isn't the biggest deal). You might want to use OTA updates instead of builds, but this requires a bit more ingenuity, because the first run of each test will always include the overhead of the update process, which can skew the performance results negatively.

You can work around this by discarding the first test run each night, but that stops you from using the prebuilt dashboard for your analysis, so it's extra effort to factor in.
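If you do go the OTA route, discarding the first run is a small post-processing step. Here is a minimal sketch, assuming you have exported one measurement per test iteration (for example, a startup time in milliseconds) as whitespace-separated numbers. The input format, the `mean_without_first_run` helper, and the sample values are all hypothetical; this is not Flashlight's actual export format.

```shell
#!/usr/bin/env bash
# Hypothetical helper: average a series of per-iteration measurements while
# skipping the first one, which would include the OTA update overhead.
# Input: whitespace-separated numbers on stdin (illustrative format only).
mean_without_first_run() {
  tr -s '[:space:]' '\n' | awk '
    NF == 0 { next }   # ignore blank lines
    { n++ }
    n == 1 { next }    # drop the first run (includes the update overhead)
    { sum += $1; kept++ }
    END {
      if (kept == 0) { print "no runs left after dropping the first" > "/dev/stderr"; exit 1 }
      printf "%.1f\n", sum / kept
    }'
}

# Made-up numbers: the first run is inflated by the update.
echo "900 400 420 410" | mean_without_first_run
```

Because the output is a single aggregate, you would lose the per-run charts the dashboard gives you, which is exactly the extra effort mentioned above.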

Setup Steps

- Create a Maestro E2E test for your app (this can be as simple as starting up the app, although testing an actual user flow gives you a better view of what's happening). The post-build hook further down expects it at `e2e/start.yml`:

```yaml
appId: com.theodo.flashlight-nightly-repo
---
- launchApp
- assertVisible: "Open up App.tsx to start working on your app!"
```

- Define a `preview` build profile for your application in `eas.json`. The test will use it to generate an internal distribution build (this shouldn't be a `developmentClient` build):

```json
{
  ...,
  "build": {
    "preview": {
      "distribution": "internal"
    }
  },
  ...
}
```

- Set up a scheduled GitHub Actions workflow (cron) in your repository to trigger an EAS build every night (or at your chosen frequency). You'll need to generate an access token on Expo and store it as the `EXPO_TOKEN` secret for your GitHub Actions. Here's an example of what it can look like:

```yaml
name: Nightly E2E Tests

on:
  workflow_dispatch:
  schedule:
    # * is a special character in YAML so you have to quote this string
    # runs once a day at 00:00 UTC (GitHub Actions schedules always use UTC)
    - cron: "0 0 * * *"

jobs:
  build:
    name: Build
    runs-on: ubuntu-latest
    steps:
      - name: Check for EXPO_TOKEN
        run: |
          if [ -z "${{ secrets.EXPO_TOKEN }}" ]; then
            echo "You must provide an EXPO_TOKEN secret linked to this project's Expo account in this repo's secrets. Learn more: https://docs.expo.dev/eas-update/github-actions"
            exit 1
          fi

      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Setup Node
        uses: actions/setup-node@v3
        with:
          node-version: 18.x
          cache: npm

      - name: Setup EAS
        uses: expo/expo-github-action@v8
        with:
          eas-version: latest
          token: "${{ secrets.EXPO_TOKEN }}"

      - name: Install dependencies
        run: npm install

      - name: Build on EAS
        run: eas build --profile preview --platform android --non-interactive --no-wait
```

- Create an EAS build hook that runs on Expo's servers after your build succeeds, and register it in your `package.json` (EAS runs the `eas-build-on-success` npm script when a build succeeds). You'll need to generate an API key on Flashlight Cloud and add it to your Expo project's secrets as `FLASHLIGHT_API_KEY`, since the post-build script runs on Expo's servers rather than in GitHub Actions:

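```shell
# Only send Android builds made with the preview profile to Flashlight
if [ "$EAS_BUILD_PROFILE" = "preview" ] && [ "$EAS_BUILD_PLATFORM" = "android" ]; then
  # Install the Flashlight CLI and add it to the PATH
  curl https://get.flashlight.dev/ | bash
  export PATH="$HOME/.flashlight/bin:$PATH"

  # Upload the release APK and run the Maestro test on Flashlight Cloud
  flashlight cloud \
    --testName "Start app" \
    --app android/app/build/outputs/apk/release/app-release.apk \
    --test e2e/start.yml \
    --apiKey "$FLASHLIGHT_API_KEY" \
    --duration 30000
fi
```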

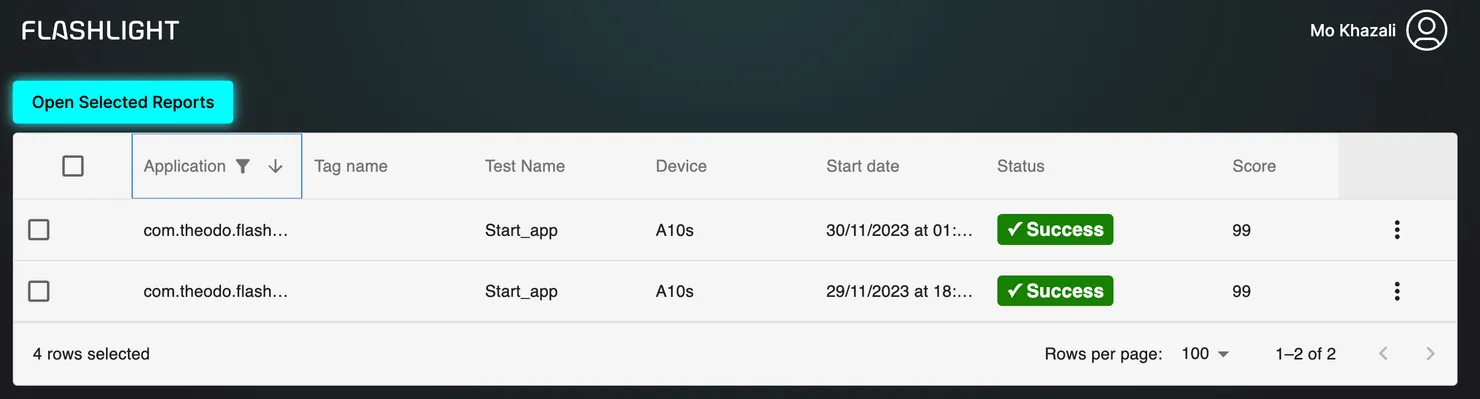

- Watch the magic happen. You'll see your tests run automatically every single night (and you'll receive an email from Flashlight).
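The post-build hook above relies on `FLASHLIGHT_API_KEY` being set in your Expo project's secrets; if it is missing, the failure may only surface later, inside the Flashlight step, with a less obvious error. Here is a minimal sketch of a fail-fast guard you could put at the top of the hook. `require_env` and `should_run_flashlight` are hypothetical helper names, and the profile/platform check mirrors the condition the hook already uses.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the EAS post-build hook.

# Fail fast with a clear message if a required value is empty.
# $1: variable name (used in the message), $2: its current value.
require_env() {
  if [ -z "$2" ]; then
    echo "Missing required environment variable: $1" >&2
    return 1
  fi
}

# Same condition as the hook: only preview builds for Android go to Flashlight.
should_run_flashlight() {
  [ "${EAS_BUILD_PROFILE:-}" = "preview" ] && [ "${EAS_BUILD_PLATFORM:-}" = "android" ]
}

if should_run_flashlight; then
  require_env FLASHLIGHT_API_KEY "${FLASHLIGHT_API_KEY:-}" || exit 1
  echo "Preview Android build detected; uploading to Flashlight"
  # Next: install the Flashlight CLI and run `flashlight cloud` as in the hook.
else
  echo "Skipping Flashlight (profile=${EAS_BUILD_PROFILE:-unset}, platform=${EAS_BUILD_PLATFORM:-unset})"
fi
```

Logging the skip reason also makes it easier to spot a misnamed profile in the EAS build logs.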

Note: you'll need to run the first Android build outside of CI, without the `--non-interactive` flag, because it has to generate the Android keystore. All subsequent builds can be triggered from CI.

Reproduction Repo

I’ve created an example repo with the setup here, which you can refer to.

Acknowledgments

Big shout-out to Alexandre Moureaux, for creating Flashlight and also reviewing this article. Flashlight is such an amazing tool and has made performance testing on mobile so much simpler.

I’d also like to thank Francisco Costa, who’s the tech lead on the current project which this setup is based on.

Feel free to reach out

Feel free to reach out to me on Twitter @mo__javad. 🙂